Why this matters (and why I’m excited to explain it)

If you’ve been on Twitter/Instagram or poking around AI tools lately, you might have caught a wave of “nano-banana” images — gorgeous, stylized edits of selfies and photos that keep the subject’s likeness while changing clothing, background, pose or even blending multiple photos into one creative composition. Behind that trend is Google’s Gemini 2.5 Flash Image model (nicknamed Nano Banana), and it’s not just a viral filter — it’s a serious step forward for image editing AI. In this post I’ll walk through what it does, how to use it, pricing, safety, prompts that work well, comparisons, and a final verdict.

What is Nano Banana / Gemini 2.5 Flash Image?

Nano Banana is the public nickname for Gemini 2.5 Flash Image, an image generation and editing model from Google (DeepMind). It supports:

- Prompt-based local edits (e.g., “blur background”, “remove person”, “change shoes to sneakers”).

- Multi-image fusion (blend multiple inputs into one coherent image).

- Character consistency (preserves the identity/appearance of people or pets across style changes).

- Conversational iterative editing — you can refine edits with natural language follow-ups instead of rebuilding images from scratch.

Why that’s notable: earlier image models were great at making new pictures, but often failed when asked to preserve the same person across variations. Nano Banana explicitly targets that problem.

Where to use it / availability

You can access the model via:

- Google AI Studio (build mode + demo apps),

- Gemini app (image features integrated into the consumer app),

- Gemini API / Google Cloud / Vertex AI for developers and enterprises.

Google has also partnered with developer platforms to widen access; the launch announcement named OpenRouter and fal.ai.
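For developers, a call through the Gemini API might look roughly like the sketch below. It assumes the `google-genai` Python SDK is installed, that an API key is stored in a `GEMINI_API_KEY` environment variable, and that the model ID is `gemini-2.5-flash-image`. The model ID and the helper names `guess_mime_type` and `edit_image` are assumptions for illustration, so check the current API docs before relying on them.

```python
import mimetypes
import os

# Assumption: confirm the current model ID in the Gemini API docs.
MODEL_ID = "gemini-2.5-flash-image"


def guess_mime_type(path: str) -> str:
    """Guess an image MIME type from the file extension (hypothetical helper)."""
    mime, _ = mimetypes.guess_type(path)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"unsupported or unknown image type: {path}")
    return mime


def edit_image(image_path: str, prompt: str, out_path: str) -> bool:
    """Send one image plus a text prompt and save the first returned image.

    Returns True if the response contained an image, False otherwise
    (for example when only text came back).
    """
    # Imported lazily so the helper above works without the SDK installed.
    from google import genai
    from google.genai import types

    client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])
    with open(image_path, "rb") as f:
        image_part = types.Part.from_bytes(
            data=f.read(), mime_type=guess_mime_type(image_path)
        )

    response = client.models.generate_content(
        model=MODEL_ID,
        contents=[image_part, prompt],
    )
    # The edited image, if any, comes back as inline bytes in a response part.
    for part in response.candidates[0].content.parts:
        if part.inline_data is not None:
            with open(out_path, "wb") as out:
                out.write(part.inline_data.data)
            return True
    return False
```

A call such as `edit_image("selfie.jpg", "Blur the background", "edited.png")` would then write the edited image to disk. The sketch does no error handling for blocked prompts or empty responses, which a real integration would need.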

Pricing (quick, transparent)

Google published pricing for Gemini 2.5 Flash Image: the model is billed by output tokens. The announcement lists $30 per 1M output tokens; at roughly 1290 output tokens per image, that works out to about $0.039 per image (1290 × $30 / 1,000,000 ≈ $0.0387). Your actual cost can vary with resolution, usage pattern, and any markup added by third-party platforms. If you’re building volume workflows, check the latest API/Studio pricing pages.
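To make the arithmetic concrete, here is a small sketch that reproduces the per-image figure and scales it to a batch. The two constants are the example numbers from the announcement; the function names are made up for illustration, and real bills may differ.

```python
# Example figures quoted in the announcement; check current pricing before budgeting.
PRICE_PER_MILLION_OUTPUT_TOKENS_USD = 30.0
OUTPUT_TOKENS_PER_IMAGE = 1290


def cost_per_image(tokens_per_image: int = OUTPUT_TOKENS_PER_IMAGE,
                   price_per_million: float = PRICE_PER_MILLION_OUTPUT_TOKENS_USD) -> float:
    """Cost in USD of one generated image, billed by output tokens."""
    return tokens_per_image * price_per_million / 1_000_000


def batch_cost(num_images: int,
               tokens_per_image: int = OUTPUT_TOKENS_PER_IMAGE,
               price_per_million: float = PRICE_PER_MILLION_OUTPUT_TOKENS_USD) -> float:
    """Cost in USD of generating num_images images at the same token count."""
    return num_images * tokens_per_image * price_per_million / 1_000_000


print(f"per image: ${cost_per_image():.4f}")
print(f"10,000 images: ${batch_cost(10_000):.2f}")
```

At these example rates, a 10,000-image batch comes to roughly $387, which is worth knowing before you wire the model into an automated pipeline.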

Hands-on features & how they feel in real use

Below are the standout features I tested / researched and what using them feels like.

1. Prompt-based targeted edits — feel: surgical

Want to change a shirt’s color, remove a distracting object, or alter a pose? Just tell it. It understands precise instructions better than previous consumer models, so edits often land exactly where you expect.

2. Multi-image fusion — feel: collage that looks real

Drop multiple input images and ask it to merge them (e.g., put a product photo into a lifestyle scene). The fusion keeps lighting and scale consistent most of the time.

3. Character consistency — feel: spooky accurate

This is the headline: when you restyle a person (retro saree look, action figure, different lighting), the model preserves identity cues — eyes, face shape, dominant features — far better than many rivals. That’s why viral trends with consistent stylized selfies exploded quickly.

4. Iterative / conversational editing — feel: easy, non-destructive

Change your mind mid-edit? Say “make the background dusk, not noon” and it will adapt. This removes the need to re-run full generations for small tweaks.

Example prompts that reliably work

(Use these as copy-paste starting points — tweak for voice/style)

- Background swap + lighting: “Replace the kitchen background with a cozy coffee shop interior; add golden hour lighting and keep subject’s pose unchanged.”

- Style transfer while keeping identity: “Turn this selfie into a 1990s Bollywood portrait; keep facial features, add silk saree and cinematic lighting.”

- Multi-image product placement: “Fuse images A and B: place the watch from image A on the model from image B in a minimalist studio setting.”

- Local repair: “Remove the stain from the white t-shirt and slightly blur the background to bokeh.”
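The prompts above share a rough shape: the change you want, an optional style or lighting note, and what must stay the same. If you generate prompts in code, a tiny helper like the one below can keep that shape consistent. `build_edit_prompt` is a hypothetical name, and the exact wording it produces is my assumption, not an official prompt format.

```python
from typing import Optional, Sequence


def build_edit_prompt(change: str,
                      keep: Sequence[str] = (),
                      style: Optional[str] = None) -> str:
    """Compose an edit prompt from a change, an optional style, and things to preserve."""
    sentences = [change.strip().rstrip(".") + "."]
    if style:
        sentences.append("Style: " + style.strip().rstrip(".") + ".")
    if keep:
        # Spell out what must not change; identity-preserving edits rely on this.
        sentences.append("Keep unchanged: " + ", ".join(keep) + ".")
    return " ".join(sentences)


prompt = build_edit_prompt(
    "Replace the kitchen background with a cozy coffee shop interior",
    keep=["subject's pose", "facial features"],
    style="golden hour lighting",
)
print(prompt)
```

Naming the things to preserve explicitly is a design choice: it keeps every prompt honest about what should not move, which matters most for likeness-preserving edits.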

Safety, watermarking & privacy — what to watch for

Google has built-in safeguards and watermarking:

- Every image created/edited with the model includes an invisible SynthID digital watermark to identify AI-generated content. There’s also a visible watermark in some products to flag AI images. That’s an important step for provenance.

But watermarking alone doesn’t solve every issue. The rapid viral trends show how easily people upload personal photos and share edited versions — which raises deep privacy concerns (risk of deepfakes, identity misuse, and unintended artifacts). Several outlets have flagged incidents where edits produced unexpected facial changes or artifacts — a reminder to be cautious with sensitive images.

Practical safety tips

- Don’t upload photos that reveal sensitive info (IDs, children’s faces, intimate photos) unless you’re comfortable with the risk.

- Remove EXIF metadata before uploading.

- For brands/clients: record and retain original files & logs for provenance.

- Leave the SynthID watermark and any visible AI labels intact, and disclose AI edits when you publish them.
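For the EXIF tip, here is a minimal sketch that drops Exif segments from a JPEG using only the Python standard library. It assumes a well-formed JPEG, removes only the Exif APP1 segment (other metadata such as XMP or IPTC stays), and the function name `strip_exif_from_jpeg` is mine. A dedicated tool or image library is the safer choice for anything important.

```python
import struct

SOI = b"\xff\xd8"   # start-of-image marker
APP1 = 0xE1         # segment type that carries Exif data
SOS = 0xDA          # start of scan: compressed pixel data follows


def strip_exif_from_jpeg(data: bytes) -> bytes:
    """Return a copy of the JPEG bytes without its Exif APP1 segments."""
    if data[:2] != SOI:
        raise ValueError("not a JPEG file (missing SOI marker)")
    out = bytearray(SOI)
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError("malformed JPEG: expected a segment marker")
        marker = data[pos + 1]
        if marker == SOS:
            # Everything from here on is image data; copy it untouched.
            out += data[pos:]
            return bytes(out)
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        segment = data[pos:pos + 2 + length]
        is_exif = marker == APP1 and segment[4:10] == b"Exif\x00\x00"
        if not is_exif:
            out += segment
        pos += 2 + length
    out += data[pos:]
    return bytes(out)
```

Typical use would be to read the file in binary mode, pass the bytes through the function, and write the result to a new file before uploading. Note that removing Exif also removes the orientation tag, so some photos may display rotated afterwards.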

Comparison: Nano Banana vs other popular image models

- DALL·E / Midjourney / Stable Diffusion: excellent at art-style generation and fantastical scenes — Nano Banana’s edge is identity consistency and conversational edits.

- Other consumer editors (in-app filters): Nano Banana gives much more granular control (local edits, multi-image fusion) and preserves likeness better.

Real use cases (who will love it)

- Content creators & marketers: fast product shots, lifestyle placements, and consistent brand characters.

- Photographers: quick mockups and iterative edits before a full retouch.

- Developers/companies: build apps that need on-demand image compositions.

- Casual users: fun stylized edits (but be mindful of privacy).

Limitations & when it struggles

- Deep contextual realism: small details (hands, reflections) can still be imperfect.

- Safety filters / blocked content: some prompts get blocked or altered to prevent misuse.

- Ethical risks: identity-preserving edits can be misused; watermarking reduces but does not eliminate risk.

- Costs at scale: at the example rate of about $0.039 per image, casual use is cheap, but 10,000 images already come to roughly $390, so high-volume production needs budgeting.

SEO & content ideas for your DiscoverAITool.com post (so search engines love it)

- Primary keyword: “Nano Banana review” / “Gemini 2.5 Flash Image review”

- Secondary keywords: “Nano Banana tutorial”, “Gemini 2.5 pricing”, “how to use Nano Banana”, “SynthID watermark”

- Suggested H2s: Features, How to use Nano Banana (step-by-step), Pricing, Safety and Privacy, Tips and Prompts, Comparison, Verdict

- Structured data idea: add FAQ schema around common questions (Is Nano Banana free? Where to access? Is my photo safe?).

- Internal link idea: link to any other AI tool reviews (Stable Diffusion, Midjourney, Gemini app guide); internal linking helps crawling and authority.
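As a rough illustration of the FAQ structured-data idea, the sketch below builds schema.org `FAQPage` JSON-LD from question/answer pairs. The function name `faq_jsonld` and the sample answers are placeholders based on this post; write your own answers and validate the output with a structured-data testing tool before publishing.

```python
import json
from typing import Iterable, Tuple


def faq_jsonld(qa_pairs: Iterable[Tuple[str, str]]) -> str:
    """Serialize question/answer pairs as schema.org FAQPage JSON-LD."""
    document = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(document, indent=2)


# Placeholder answers drawn from this post; replace with your own wording.
print(faq_jsonld([
    ("Where can I access Nano Banana?",
     "Through Google AI Studio, the Gemini app, and the Gemini API / Vertex AI."),
    ("Is my photo safe?",
     "Avoid uploading sensitive photos and remove EXIF metadata first."),
]))
```

The resulting JSON would normally be embedded in the page inside a `<script type="application/ld+json">` tag.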

Quick Step-by-Step: Try Nano Banana in Google AI Studio / Gemini app

- Open Google AI Studio or the Gemini mobile app (ensure you are using the latest model version: Gemini 2.5 Flash Image).

- Upload one or more images you want to edit or use as reference.

- Enter a natural language prompt describing the edit (start simple).

- For surgical edits, name the exact object or region in your prompt, or use a mask/selection tool if your interface provides one.

- Iterate using follow-up prompts (“a little brighter”, “more contrast”), which the model understands conversationally.

- Export and check metadata/watermark as needed.

Verdict — should you use Nano Banana?

Yes, if you’re a creator, marketer, or developer who needs higher-fidelity edits that preserve likeness and wants conversational, iterative control. It’s a practical jump forward for real-world editing workflows. However, use it responsibly: watermarking helps, but consent, privacy, and ethical use still fall on the user. Budget for usage costs if you scale beyond casual experimentation.

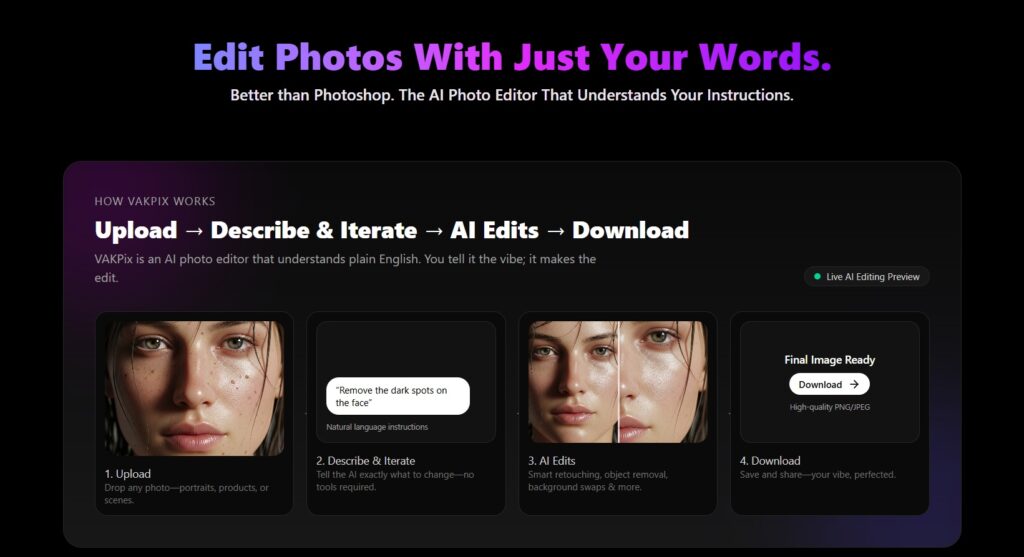
